by Anne Menendez | Jun 24, 2019 | Product News |

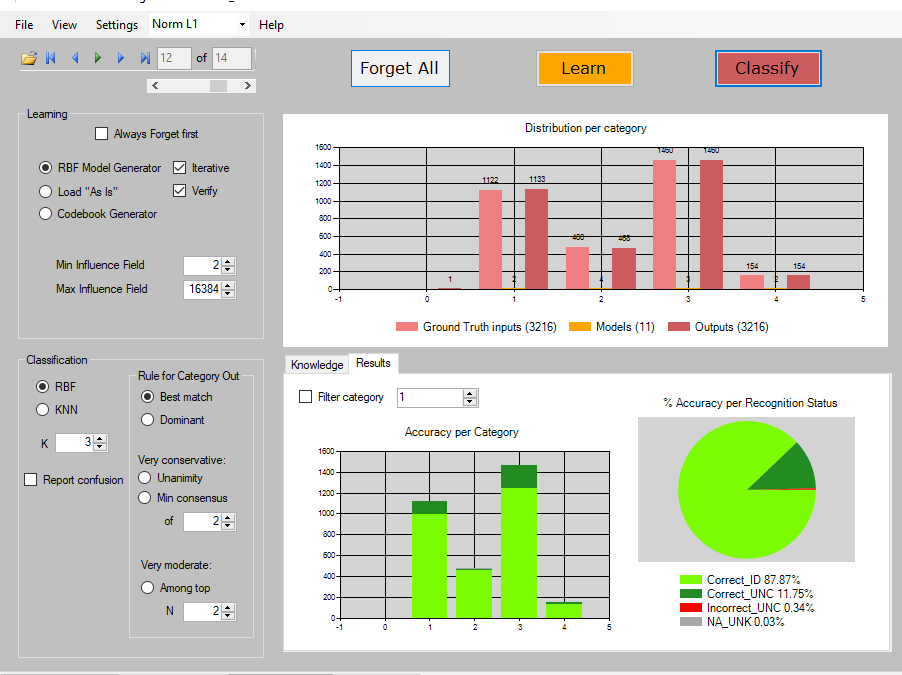

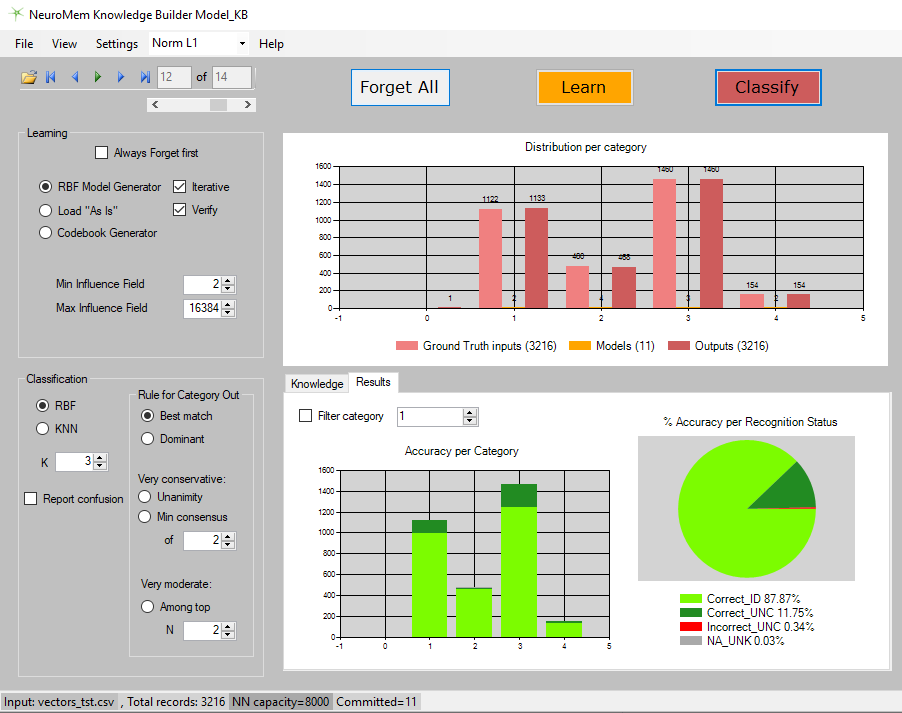

General Vision is introducing NeuroMem Knowledge Builder (NMKB) version 4.0 with new and improved functions including the ability to learn and classify multiple files in batch, the selection of new consolidation rules to waive uncertain classifications, and more.

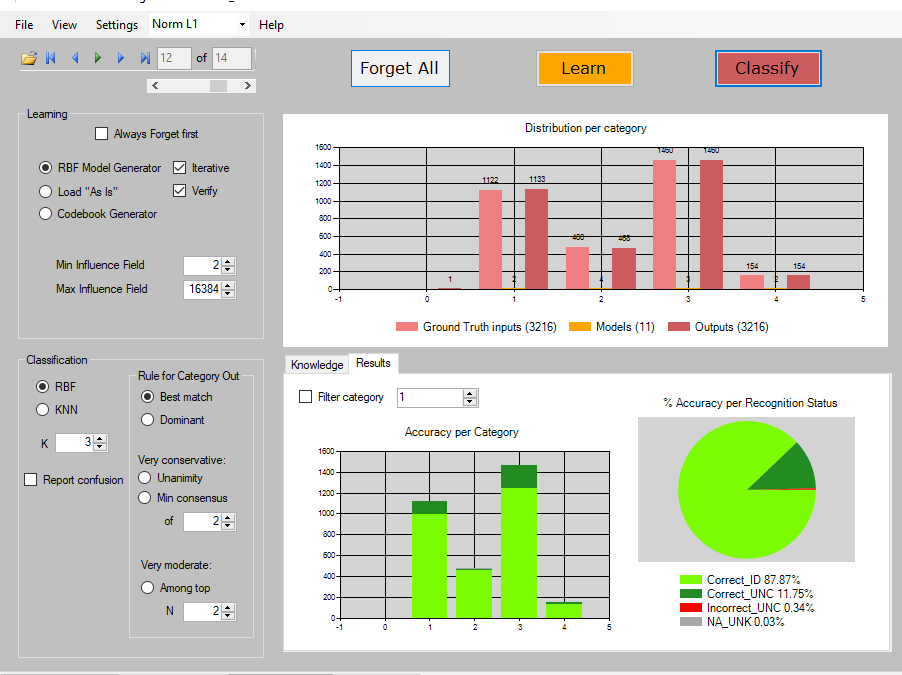

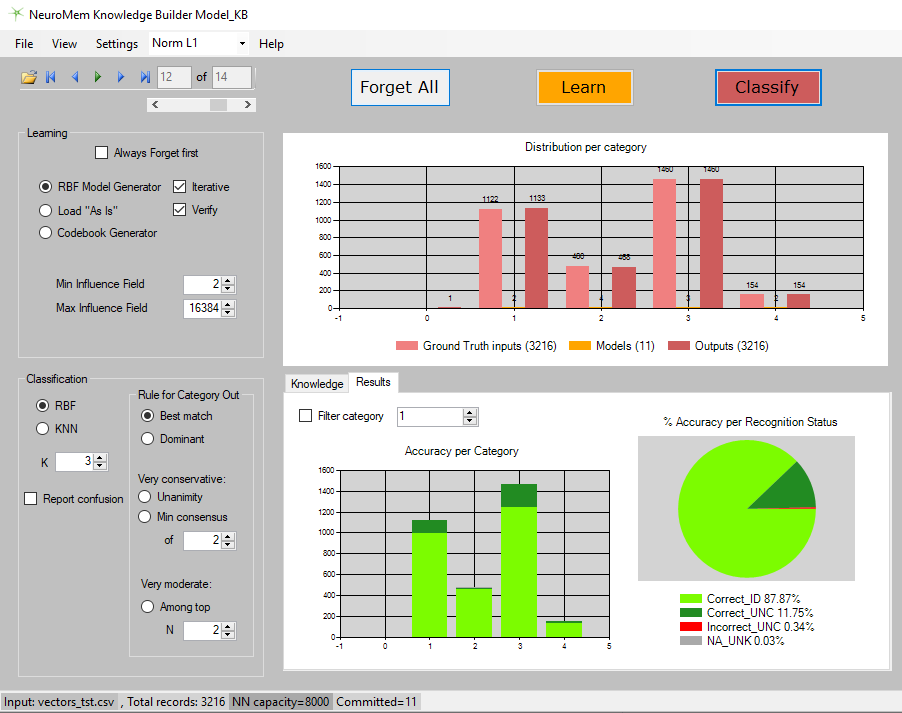

NeuroMem Knowledge Builder (NMKB) is a simple framework to experiment with the power and adaptivity of the NeuroMem RBF and KNN classifier. It lets you train and classify your datasets in a few mouse clicks while producing rich diagnostics reports to help you find the best compromise between accuracy and throughput, uncertainties and confusion, and more.

The application runs under Windows and integrates a cycle-accurate simulation of 8000 neurons. It can also interface to the NM500 chips of the NeuroMem USB dongle (2304 neurons) and the NeuroShield board (576 neurons, expandable).

Simple toolchain for non-AI experts

NMKB can import labeled datasets derived from any data type, such as text, heterogeneous measurements, images, video and audio files. The neurons can build a knowledge in a few seconds, and diagnostics reports indicate whether the training dataset was significant and sufficient, how many models the neurons retained to describe each category, and more. To classify new datasets, you can choose to use the neurons as a Radial Basis Function (RBF) or K-Nearest Neighbor (KNN) classifier. Other settings include the value of K and a consolidation rule that produces a single output in case of uncertainty. The throughput and accuracy of the classification are reported per category.
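To make the KNN setting and the consolidation rule more concrete, here is a minimal Python sketch. It is not NMKB code or the NeuroMem API: the function names, the rule names ("closest", "majority", "unanimous") and the use of an L1 distance are assumptions chosen for illustration. It only shows how K neighbors can be reduced to a single output, or waived when they disagree.

```python
from collections import Counter


def l1_distance(a, b):
    """Sum of absolute differences between two vectors (assumed metric)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def classify_knn(models, vector, k=3, rule="majority"):
    """Return one category, or None when the classification is waived.

    models: list of (pattern, category) pairs retained during training.
    rule:   hypothetical consolidation rule names, not NMKB's own.
    """
    # Keep the K stored models closest to the input vector.
    nearest = sorted(models, key=lambda m: l1_distance(m[0], vector))[:k]
    categories = [category for _, category in nearest]

    if rule == "closest":
        # Always answer with the category of the nearest model.
        return categories[0]
    if rule == "majority":
        # Answer with the most frequent category; waive on a tie.
        counts = Counter(categories)
        best = max(counts.values())
        winners = [c for c, n in counts.items() if n == best]
        return winners[0] if len(winners) == 1 else None
    if rule == "unanimous":
        # Answer only if all K neighbors agree; otherwise waive.
        return categories[0] if len(set(categories)) == 1 else None
    raise ValueError(f"unknown consolidation rule: {rule}")


models = [([0, 0], "A"), ([1, 1], "A"), ([10, 10], "B"), ([11, 11], "B")]
print(classify_knn(models, [0, 1], k=3, rule="majority"))
print(classify_knn(models, [0, 1], k=3, rule="unanimous"))
```

A stricter rule waives more vectors but makes fewer wrong calls, which is the kind of trade-off between uncertainties and confusion that the diagnostics reports are meant to expose.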

Building a knowledge with traceability

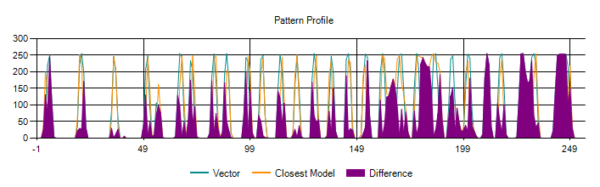

The application produces a data log to easily track and compare the settings and workflows that were tested on a given dataset. NMKB also takes advantage of the traceability of the knowledge built by the NeuroMem neurons. For example, you can filter the vectors that were classified incorrectly and understand why by comparing their profile to the firing models. This utility may even pinpoint errors in the input datasets!

Primitive and custom knowledge bases

Finally, the knowledge built by the neurons can be saved and exported to other NeuroMem platforms, which can use the knowledge “as is” or enrich it if they are configured with a learning logic. A typical NeuroMem platform features a Field Programmable Gate Array (FPGA) and a bank of NM500 chips interconnected either directly or through the FPGA, along with the GPIOs and communication ports required by the targeted application. What all these platforms have in common is that the latencies to learn and recognize are deterministic and independent of the complexity of your datasets. The network’s parallel architecture also lets it scale seamlessly by cascading chips.

In addition to the NeuroMem Knowledge Builder, General Vision offers SDKs interfacing to NeuroMem networks for generic pattern recognition and image recognition with examples in C/C++, C#, Python, MATLAB and LabVIEW.

by Anne Menendez | Feb 12, 2019 | Application Notes |

Smart sensors are driving the deployment of monitoring systems in our everyday lives, from wearables tracking our mobility to complex sensor hubs ensuring the quality of a production line and the proper operation of its machinery. Their “smartness” comes from software running a pattern recognition engine and associated decision logic. In the case of predictive monitoring, the sensor needs to be paired with some higher intelligence capable of learning the novelties (objects or events) that are not recognized by the always-on recognition engine. Detecting a novelty should do more than trigger a warning or actuation signal. Recording every novelty would generate a large amount of redundant data, which is not practical for most sensory devices because they lack storage capacity. Learning the novelties instead makes it possible to model a spurious change, a temporary drift, or an irreversible trend, paving the way to intelligent decision-making.

This is where a NeuroMem® neural network becomes a real problem solver. Unlike a Deep Learning engine, this network will admit when it does not know, which is precisely the information of interest in predictive maintenance. It is capable of learning in real time (including new categories) and of classifying novelties.
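The idea of a network that "admits when it does not know" and then learns the novelty can be sketched in a few lines of Python. This is a simplified RBF-style model written for illustration only, not the NeuroMem API or its exact learning logic: the class name, the `max_field` and `min_field` parameters, and the L1 distance are all assumptions.

```python
class TinyRBFNetwork:
    """Toy RBF-style classifier with per-neuron influence fields."""

    def __init__(self, max_field=10, min_field=1):
        self.neurons = []  # each neuron is [pattern, category, field]
        self.max_field = max_field
        self.min_field = min_field

    @staticmethod
    def distance(a, b):
        # L1 distance, assumed here for simplicity.
        return sum(abs(x - y) for x, y in zip(a, b))

    def classify(self, vector):
        """Return the categories of firing neurons, closest first.

        An empty list means no neuron fired: the input is unknown.
        """
        hits = []
        for pattern, category, field in self.neurons:
            d = self.distance(pattern, vector)
            if d < field:
                hits.append((d, category))
        hits.sort(key=lambda h: h[0])
        categories = []
        for _, category in hits:
            if category not in categories:
                categories.append(category)
        return categories

    def learn(self, vector, category):
        """Teach one example; commit a new neuron only if needed."""
        recognized = False
        new_field = self.max_field
        for neuron in self.neurons:
            d = self.distance(neuron[0], vector)
            if neuron[1] == category:
                if d < neuron[2]:
                    recognized = True
            else:
                # A neuron of another category must not cover this example.
                if d < neuron[2]:
                    neuron[2] = max(d, self.min_field)
                new_field = min(new_field, d)
        if not recognized:
            field = max(new_field, self.min_field)
            self.neurons.append([list(vector), category, field])


net = TinyRBFNetwork(max_field=10)
net.learn([5, 5], "normal")
print(net.classify([6, 6]))    # a firing neuron recognizes the input
print(net.classify([30, 30]))  # no neuron fires: novelty
net.learn([30, 30], "drift")   # the novelty becomes a new category
print(net.classify([31, 30]))
```

In this sketch the novelty is stored as a single new model rather than as a growing log of raw recordings, and the example that was already recognized adds no neuron at all.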

Read the full application note @

by Anne Menendez | Feb 12, 2019 | Application Notes |

Production and Quality Control managers are always interested in Machine Vision systems that can improve manufacturing processes, control the quality of products, and predict machinery maintenance before failure. They will have more faith in a system tested on their production line than in one tested in a laboratory setting. Still, their goodwill will shrink if the test must disrupt ongoing manufacturing.

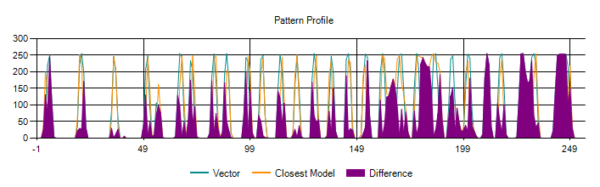

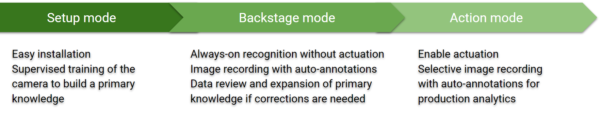

A General Vision smart camera powered by a NeuroMem neural network can be deployed in three easy, non-intrusive steps, and its training can be performed by the factory operators.

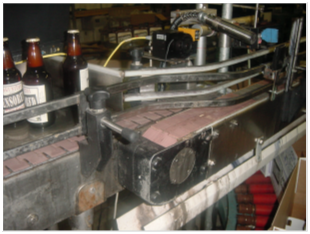

In the picture to the right, the camera is mounted on a rail along the conveyor belt and connected temporarily to a touch-screen to adjust the sensor settings and teach relevant examples of bottles passing by. Recognition starts immediately, but at first it is used only to monitor and record the classification of the bottles. The annotations generated by the network can be corrected and used as new examples to teach the neurons. Once the classification is satisfactory over a reasonable production batch, the camera’s output lines can be enabled to actuate an ejector, diverter, warning light, etc. The category recognized by the neurons becomes the action command.

Now that the test has been shown to be non-disruptive, it is time to demonstrate to the accommodating manager that the operators can train the camera on their own and continuously fine-tune how the NeuroMem neural network recognizes or discriminates objects.

In the brewery, an operator with enough practice can predict if a bottle just filled with beer will end up with the proper amount of liquid once the foam has settled. Similarly, in a meat processing plant, a human inspector can grade the fat quality in the blink of an eye. The good news is that this field expert can transfer his “visual” knowledge by simply annotating images of products as they pass in front of the camera. There is no need to describe “why” an image is tagged with a certain category.

Read the complete application note @

by Anne Menendez | Dec 11, 2018 | Application Notes |

Industrial IoT is favoring RBF-type classification over Deep Learning for several essential reasons. First is the convenience of running tasks locally, without depending on a remote server. Indeed, an RBF classifier is a lifelong learner that can be taught incrementally and in real time. Adapting to changes in production, new materials and other environmental conditions can be done immediately. This eliminates the need to send the new annotated examples to the cloud and wait for the generation of a new inference engine. Secondly, an RBF classifier does not report probabilities but instead enumerates multiple categories when the neurons do not reach a consensus. This behavior is much preferable when a mistake carries a cost, whether the application is processing, quality control or predictive maintenance.
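As a rough illustration of why enumerating categories helps when a mistake is costly, the Python sketch below turns the list of categories returned by the firing neurons into a status and an action. The status names, the function names and the `flag_for_review` fallback are hypothetical and only serve the example; they are not taken from a NeuroMem SDK.

```python
def recognition_status(firing_categories):
    """Summarize the categories reported by the firing neurons.

    Returns a (status, categories) pair where status is one of
    "unknown", "identified" or "uncertain" (labels assumed here).
    """
    # Remove duplicates while keeping the original order.
    distinct = list(dict.fromkeys(firing_categories))
    if not distinct:
        return ("unknown", [])
    if len(distinct) == 1:
        return ("identified", distinct)
    return ("uncertain", distinct)


def decide_action(firing_categories, actions, fallback="flag_for_review"):
    """Act automatically only when the neurons reach a consensus."""
    status, categories = recognition_status(firing_categories)
    if status == "identified":
        return actions.get(categories[0], fallback)
    # Unknown or conflicting responses are not forced into a guess.
    return fallback


actions = {"ok": "pass", "underfilled": "eject"}
print(decide_action(["underfilled", "underfilled"], actions))
print(decide_action(["ok", "underfilled"], actions))
print(decide_action([], actions))
```

The point of the design is that an uncertain or unknown response is routed to a safe fallback instead of being collapsed into the most probable class.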

You can read more on this topic in an article written by Philippe Lambinet and published in the IIOT World magazine. Mr. Lambinet is the president of Cogito Instruments and has chosen to integrate NeuroMem RBF neural network chips in Cogito’s CompactRIO® cartridge, which is compatible with the National Instruments® platform.